Miona Aleksic

on 18 January 2023

Containerization vs. Virtualization: understand the differences

Over the last couple of decades, a lot has changed in how companies run their infrastructure. The days of dedicated physical servers are long gone, and there are now a variety of options for making the most of your hosts, whether you run them on-prem or in the cloud. Virtualization paved the way for scalability, standardization and cost optimization. Containerization brought new efficiencies. In this blog post, we’ll talk about the differences between the two, and how each is beneficial.

What is virtualization?

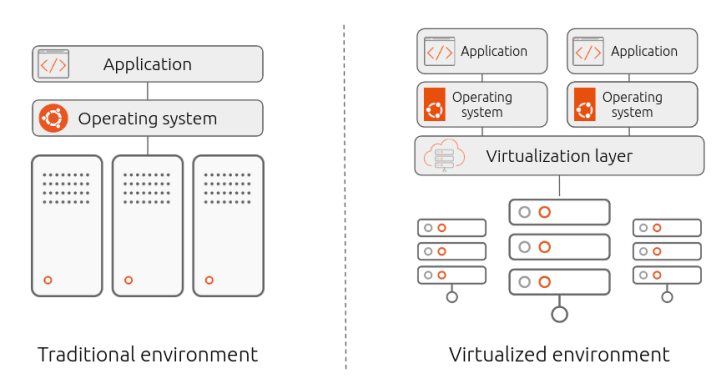

Back in the old days, physical servers functioned much like a regular computer would. You had the physical box, you would install an operating system, and then you would install applications on top. These types of servers are often referred to as ‘bare metal servers’, as there’s nothing in between the actual physical (metal) machine and the operating system. Usually, each server was dedicated to one specific purpose, such as running a single designated system. Management was simple, and issues were easier to troubleshoot because admins could focus their attention on that one server. The costs, however, were very high. Not only did you need more and more servers as your business grew, but you also needed enough space to house them.

While virtualization technology has existed since the 1960s, server virtualization only started to take off in the early 2000s. Rather than having the operating system run directly on top of the physical hardware, virtualization adds a layer in between, enabling users to deploy multiple virtual servers, each with its own operating system, on one physical machine. This brought companies significant savings and better hardware utilization, and eventually paved the way for cloud computing.

The role of a hypervisor

Virtualization wouldn’t be possible without a hypervisor (also known as a virtual machine monitor) – a software layer enabling multiple operating systems to co-exist while sharing the resources of a single hardware host. The hypervisor acts as an intermediary between virtual machines and the underlying hardware, allocating host resources such as memory, CPU, and storage.
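To make the hypervisor’s role as a resource allocator more concrete, here is a toy Python model. `ToyHypervisor`, `Host`, `VM` and their methods are hypothetical names invented for illustration, not the API of any real hypervisor. The sketch also assumes a deliberately simple policy of refusing any request that would exceed the host’s capacity; real hypervisors can overcommit CPU and, with care, memory.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Physical resources of one hardware host (hypothetical units)."""
    cpus: int
    memory_gb: int


@dataclass
class VM:
    """A virtual machine's resource request."""
    name: str
    vcpus: int
    memory_gb: int


@dataclass
class ToyHypervisor:
    """Hypothetical intermediary that carves host resources into VMs.

    For simplicity it refuses any request that would overcommit the host.
    """
    host: Host
    vms: list[VM] = field(default_factory=list)

    def free(self) -> tuple[int, int]:
        # Remaining (CPUs, memory) after subtracting what running VMs hold.
        used_cpus = sum(vm.vcpus for vm in self.vms)
        used_mem = sum(vm.memory_gb for vm in self.vms)
        return self.host.cpus - used_cpus, self.host.memory_gb - used_mem

    def create_vm(self, vm: VM) -> bool:
        free_cpus, free_mem = self.free()
        if vm.vcpus > free_cpus or vm.memory_gb > free_mem:
            return False  # not enough host capacity left
        self.vms.append(vm)
        return True

    def destroy_vm(self, name: str) -> None:
        # Releasing a VM returns its resources to the shared pool.
        self.vms = [vm for vm in self.vms if vm.name != name]


hv = ToyHypervisor(Host(cpus=16, memory_gb=64))
print(hv.create_vm(VM("web", vcpus=4, memory_gb=16)))   # True
print(hv.create_vm(VM("db", vcpus=8, memory_gb=32)))    # True
print(hv.create_vm(VM("batch", vcpus=8, memory_gb=8)))  # False: only 4 CPUs left
hv.destroy_vm("web")
print(hv.create_vm(VM("batch", vcpus=8, memory_gb=8)))  # True
```

Destroying a VM hands its share back to the host, which is what makes it cheap to reshuffle several workloads on one physical machine.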

There are two main types of hypervisors: Type 1 and Type 2.

- Type 1 hypervisors, also known as bare-metal hypervisors, run directly on the host’s hardware and are responsible for managing the hardware resources and running the virtual machines. Because they run directly on the hardware, they are often more efficient and have a smaller overhead than Type 2 hypervisors. Examples of Type 1 hypervisors include VMware ESXi, Microsoft Hyper-V, and Citrix XenServer.

- Type 2 hypervisors, also known as hosted hypervisors, run on top of a host operating system and rely on it to provide the necessary hardware resources and support. Because they run on top of an operating system, they are often easier to install and use than Type 1 hypervisors, but they might be less efficient. Examples of Type 2 hypervisors include VMware Workstation and Oracle VirtualBox.

Benefits and disadvantages

The biggest benefit of virtualization is, of course, the resulting cost savings. By running multiple virtual machines on a single physical server, you save money and space and are able to do more with less. Virtualization also allows for better resource utilization and greater flexibility. Consolidating workloads as VMs on fewer servers keeps hardware from standing idle. You can also easily create, destroy and migrate VMs between hosts, making it easier to scale and manage your computing resources, as well as to implement disaster recovery plans.

In terms of disadvantages, virtualization does add performance overhead, since it introduces an additional layer between the hardware and the guest operating system. Depending on the workload, the drop in performance can be noticeable unless significant RAM and CPU resources are allocated. In addition, while virtualization saves money in the long run, the upfront investment can be burdensome. Virtualization also adds complexity to running your infrastructure, as you need to manage and maintain both physical and virtual instances.

What is containerization?

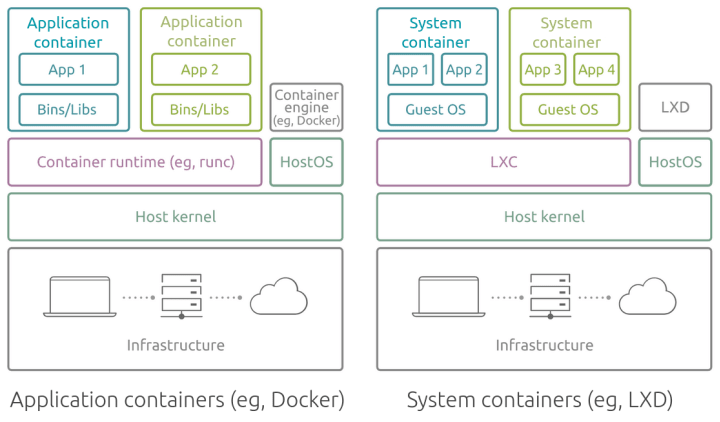

Containerization also allows users to run many instances on a single physical host, but it does so without needing a hypervisor to act as an intermediary. Instead, features of the host kernel (on Linux, primarily namespaces and cgroups) are used to isolate multiple independent instances (containers). Because containers share the host kernel, they avoid the overhead of virtualization: there’s no virtual hardware layer and no separate kernel to boot for each instance. This is why containers are considered a more lightweight solution; they require fewer resources without compromising on performance.
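A rough way to see why sharing the kernel matters is to count how many copies of the same small application fit into one host’s memory. The figures below (a 64 GB host, 4 GB reserved for the host OS, 1 GB per application, 2 GB of guest OS overhead per VM) are hypothetical assumptions, and `max_instances` is an illustrative helper. The sketch considers memory only and treats per-container overhead as zero, which is a simplification; it just illustrates the arithmetic.

```python
def max_instances(host_mem_gb: int, host_os_gb: int,
                  app_mem_gb: int, per_instance_os_gb: int) -> int:
    """How many identical instances fit in host memory (memory only).

    per_instance_os_gb is the extra memory each instance needs for its own
    guest kernel and OS: non-zero for a VM, assumed zero for a container
    that shares the host kernel.
    """
    usable = host_mem_gb - host_os_gb
    return usable // (app_mem_gb + per_instance_os_gb)


# Hypothetical figures, chosen only to illustrate the arithmetic.
HOST_MEM, HOST_OS, APP = 64, 4, 1
vms = max_instances(HOST_MEM, HOST_OS, APP, per_instance_os_gb=2)
containers = max_instances(HOST_MEM, HOST_OS, APP, per_instance_os_gb=0)
print(vms, containers)  # 20 60
```

Under these assumptions the per-instance guest OS, not the application itself, dominates the VM footprint, which is the overhead containers avoid.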

Application vs. system containers

It is worth noting that there are different types of containers: application, system and embedded. They all rely on the host kernel, offering bare metal performance with no virtualization overhead, but they do so in slightly different ways.

- Application containers (such as Docker), also known as process containers, package and run a single process or a service per container. They are packaged along with all the libraries, dependencies, and configuration files they need, allowing them to be run consistently across different environments.

- System containers (such as those run by LXD), on the other hand, behave much like a physical or virtual machine. They run a full operating system environment, including its init system and services, on the shared host kernel. They have the same behaviour and manageability as VMs, without the usual overhead and with the density and efficiency of containers. Read our blog post on Linux containers if you are curious about how they work.

Benefits and disadvantages

Containers are great due to their density and efficiency: you can run many container instances while still enjoying the benefits of bare metal performance. They also let you deploy applications quickly, consistently and portably, since all dependencies are already packaged within the container. Users have only one host operating system to maintain, and they can get the most out of their infrastructure resources without compromising on scalability.

While resource efficiency and scalability are important, running numerous containers can significantly increase the complexity of your environment. Monitoring, observing and operating thousands of containers can be a daunting task without the right tooling in place. In addition, because every container shares the host kernel, a kernel vulnerability on the host can compromise everything running in your containers.

How does this relate to the cloud?

Virtualization and containerization are both essential to the cloud. Regardless of the type of cloud (public, private or hybrid), the essential mechanism is the same: the underlying hardware, wherever it is located, is used to provide virtual environments to users. Without virtualization technologies, there would be no cloud computing. When it comes to running containers in the cloud, you can typically run them either directly on bare metal (container instances) or on regular compute instances, which are themselves virtualized.

What is the right choice for you?

Whether you should go for virtualization, containerization or a mix of the two really depends on your use case and needs. Do you need to run multiple applications with different OS requirements? Virtualization is the way to go. Are you building something new from the ground up and want to optimize it for the cloud? Containerization is the choice for you. Many of the same considerations apply when choosing your cloud migration strategy, which we explore in more depth in our recent whitepaper.
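The rule of thumb above can be written down as a tiny decision helper. `suggest_platform` and its two flags are hypothetical, and treating the case where both needs apply as a "mix of both" (for example, containers running inside VMs) is our assumption rather than a prescription.

```python
def suggest_platform(mixed_os_requirements: bool, greenfield_cloud_app: bool) -> str:
    """Hypothetical helper encoding the rule of thumb, not a real tool."""
    if mixed_os_requirements and greenfield_cloud_app:
        # Assumption: both needs present, e.g. containers running inside VMs.
        return "mix of both"
    if mixed_os_requirements:
        # Containers share the host kernel, so different OSes need VMs.
        return "virtualization"
    if greenfield_cloud_app:
        return "containerization"
    return "depends on the use case"


print(suggest_platform(mixed_os_requirements=False, greenfield_cloud_app=True))  # containerization
```

The key reasoning step is in the second branch: because containers share the host kernel, workloads that need a different operating system kernel call for separate VMs.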

Further Reading

Learn more about Canonical’s open source infrastructure solutions.

https://ubuntu.com/blog/lxd-virtual-machines-an-overview

https://ubuntu.com/blog/chiselled-containers-perfect-gift-cloud-applications

https://ubuntu.com/blog/open-source-for-beginners-dev-environment-with-lxd